Two Hypotheses per Test

In our book we describe the TDD practice of Listening to the Tests, in which the ease, or otherwise, of writing tests is a source of feedback on design quality. I've found it useful to think about this practice another way: that, when doing test-driven development, each test we write records two hypotheses:

System Hypothesis: the code performs the externally visible behaviour verified by the test.

Running the test code tests this hypothesis. It will be false when we first write the test. It becomes true once we've written an implementation that makes the test pass. It should remain true as we make further changes to the system.

If we need to change or remove existing behaviour, we first change the tests to record our new System Hypothesis.
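As a rough illustration of the System Hypothesis, here is a minimal sketch in Python. The `Basket` class, its methods and the `run_system_hypothesis` helper are all hypothetical names invented for this example; they are not taken from the book. The test states the externally visible behaviour, and running it tells us whether the hypothesis currently holds.

```python
import unittest


class Basket:
    """Hypothetical domain object, invented for this illustration."""

    def __init__(self):
        self._prices = []

    def add(self, price_in_pence):
        self._prices.append(price_in_pence)

    def total(self):
        return sum(self._prices)


class BasketTest(unittest.TestCase):
    # System Hypothesis: a basket reports the sum of the prices added to it.
    def test_total_is_sum_of_item_prices(self):
        basket = Basket()
        basket.add(250)
        basket.add(100)
        self.assertEqual(basket.total(), 350)


def run_system_hypothesis():
    """Run the test and report whether the hypothesis currently holds."""
    suite = unittest.defaultTestLoader.loadTestsFromTestCase(BasketTest)
    result = unittest.TestResult()
    suite.run(result)
    return result.wasSuccessful()
```

Before `Basket.total` existed, the same test would have failed, which is the "false when we first write the test" state. If the required behaviour changed, we would edit the assertion first and only then touch `Basket`.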

Model Hypothesis: the domain model encompasses the structures required to implement that behaviour.

Writing the test code tests this hypothesis. It will usually be true when adding features to a mature, well-factored system. It will usually be false as we grow the system early in its development. It may be falsified by the addition of significant new features. (Or rather, a significant new feature is one that falsifies this hypothesis and causes us to rethink our model.)

If the test is difficult to write or make pass, then we must first come up with a new model, refactor existing code to fit that model and then write the test.
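To make the Model Hypothesis concrete, here is a small sketch, again in Python and again using made-up names (`Money`, `total`) that are assumptions for illustration only. Suppose prices were modelled as bare integers and we tried to write a test about mixed currencies: the test could not be phrased cleanly, which falsifies the hypothesis that the model has the structures we need. One possible response is to introduce a new concept into the model and then write the test against it.

```python
from dataclasses import dataclass


# Before: with prices as bare integers there is no natural way to say
# "250 pence plus 100 euro cents" in a test. That difficulty is the feedback.

@dataclass(frozen=True)
class Money:
    """Hypothetical concept added to the model after the test proved hard to write."""
    amount: int      # minor units, e.g. pence or cents
    currency: str

    def __add__(self, other):
        if self.currency != other.currency:
            raise ValueError("cannot add different currencies")
        return Money(self.amount + other.amount, self.currency)


def total(prices):
    """Sum a non-empty list of Money values."""
    result = prices[0]
    for price in prices[1:]:
        result = result + price
    return result


# After: with Money in the model, the tests read directly in domain terms.
def test_total_keeps_currency():
    assert total([Money(250, "GBP"), Money(100, "GBP")]) == Money(350, "GBP")


def test_mixed_currencies_are_rejected():
    try:
        total([Money(250, "GBP"), Money(100, "EUR")])
    except ValueError:
        return
    raise AssertionError("expected ValueError")
```

The point of the sketch is the ordering, not the particular design: the awkward test came first, the new model second, and the readable test last.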

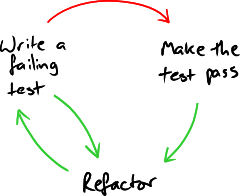

I find that being mindful of which hypothesis I'm currently testing as we go through the test/implement/refactor cycle makes me more receptive to the feedback that the tests give me. Finding that our code fails the Model Hypothesis is a good thing: we've learned more about the domain and must capture what we've learned in our domain model.